Generative Intents

Why intents are hard today

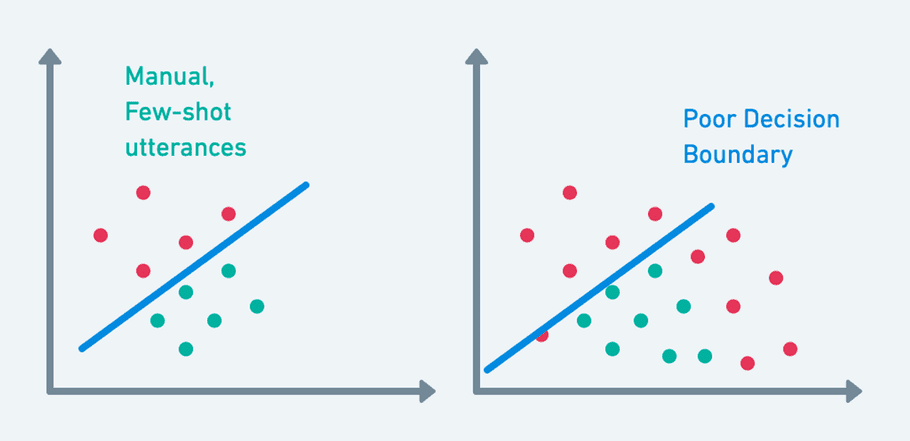

One of the hardest things with today's toolkits is coming up with good, diverse "sample utterances" for training intents. Many toolkits promise "few-shot learning," but their performance rarely holds up in production.

This is because:

1. Development examples don't mirror production.
2. Development examples are too similar to one another.

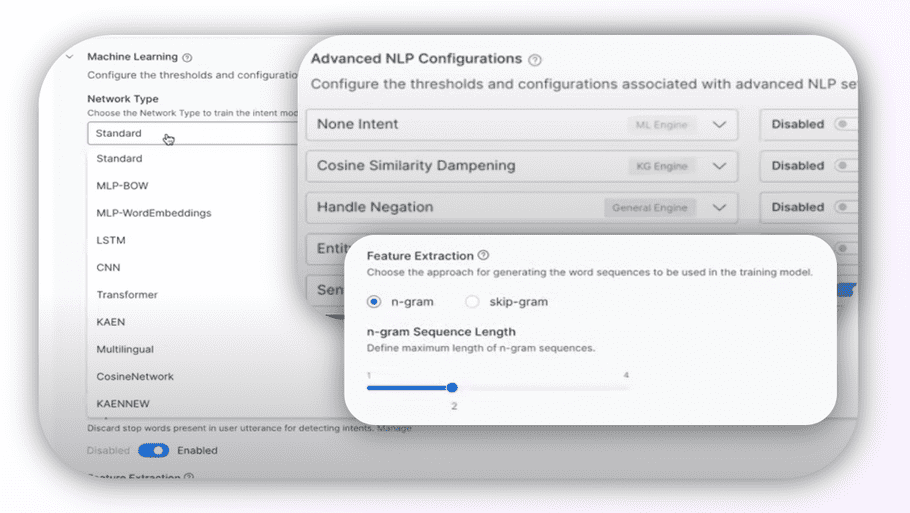

Because the underlying data pipeline is broken, those same toolkits give you "controls" where you try your luck, guessing at different configurations until something seems good enough.

None of this is AI. It's a broken process, and we took responsibility for fixing it.

Our solution: Generative Intents

Instead of relying on a flawed data pipeline to build intents, Moveworks builds a generative intent for your creators, on-the-fly.

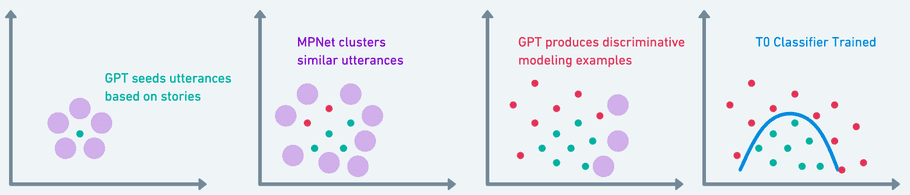

First, we use all the Path data provided by the example stories, and use GPT to generate synthetic utterances that make sense for these stories.

Second, we use an in-house enterprise-language clustering model called MPNet to retrieve similar utterances from your production traffic.

Third, we combine those production utterances with GPT to produce samples that are near your decision boundary. All you do is tell us if we're getting warmer or colder.
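To make the retrieval in the second step more concrete, here is a minimal sketch of finding production utterances that resemble a set of seed utterances. It is an illustration under assumptions, not the actual pipeline: `embed` is a toy bag-of-words stand-in for a real sentence-embedding model, and `retrieve_similar`, the seed list, and the production list are all hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for a sentence-embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_similar(seeds, production, top_k=2):
    """Rank production utterances by their best similarity to any seed utterance."""
    seed_vecs = [embed(s) for s in seeds]
    scored = []
    for utterance in production:
        vec = embed(utterance)
        best = max(cosine(vec, seed_vec) for seed_vec in seed_vecs)
        scored.append((best, utterance))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [utterance for _, utterance in scored[:top_k]]


# Hypothetical data: synthetic seeds and a sample of production traffic.
seeds = ["reset my password", "i forgot my password"]
production = [
    "how do i reset my vpn password",
    "order a new laptop",
    "forgot my password again",
    "what is the holiday schedule",
]

similar = retrieve_similar(seeds, production)
print(similar)
```

In a real system the toy `embed` function would be replaced by a learned embedding model, which can match utterances that share meaning but not vocabulary.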

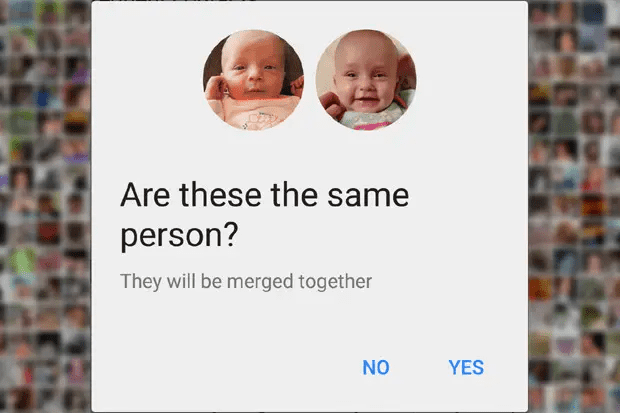

This is a technique called discriminative modeling. It's similar to what happens in Google Photos when it shows you a picture of your friend and asks “Is this the same person?” four or five times, and then it knows how to identify that person in 1,000 more pictures.
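One common way to implement this kind of "warmer or colder" loop is uncertainty sampling from active learning: show the reviewer only the utterances the current model is least sure about. The sketch below assumes that approach rather than describing the product's internals. The probability scores, `pick_boundary_samples`, and `collect_feedback` are hypothetical.

```python
def pick_boundary_samples(scored, k=2):
    """Return the k utterances whose intent probability is closest to 0.5."""
    ranked = sorted(scored, key=lambda pair: abs(pair[1] - 0.5))
    return [utterance for utterance, _ in ranked[:k]]


def collect_feedback(candidates, answer):
    """Ask a reviewer (any callable returning True/False) about each candidate."""
    return [(utterance, answer(utterance)) for utterance in candidates]


# Hypothetical (utterance, probability-of-intent) pairs from a current model.
scored = [
    ("reset my password", 0.97),
    ("my badge stopped working", 0.52),
    ("unlock my account", 0.61),
    ("order a new laptop", 0.03),
]

queue = pick_boundary_samples(scored)

# Stand-in for a human reviewer: says yes only to account-related utterances.
labels = collect_feedback(queue, lambda utterance: "account" in utterance)
print(labels)
```

Confident predictions (0.97 and 0.03 here) are skipped, so each yes/no answer is spent where it moves the decision boundary the most.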

Fourth, we tune a T0 classifier, which is a zero-shot, generative LLM with 11B parameters. T0 is based on Flan-T5, which outperforms GPT on domain classification tasks. After tuning, we continuously benchmark performance to give you the best enterprise language model experience.