Assistant vs Classic

Creator Studio experiences are significantly upgraded in Assistant compared to Classic (legacy). View differences for each workspace:

Paths

Assistant's generative framework brings reasoning to your Paths use cases. Your use cases receive the following benefits:

- Implicit slot detection

Classic

The Classic bot must ask each question explicitly.

Assistant

Assistant can automatically detect slots from previous utterances & asks explicitly only when needed.

- Handle multiple actions

Classic

The Classic bot presents multiple actions, but the user can only pick one at a time.

Assistant

Assistant can perform multiple logical actions at once.

- Easy context switching

Classic

The Classic bot requires users to step outside the use case to switch context.

Assistant

Assistant allows context switching between use cases & native skills. Users can pick up where they left off.

- Improved dialogs

Classic

The Classic bot relies solely on the use case configuration to generate dialogs.

Assistant

Assistant generates dialogs on-the-fly. It uses in-chat context & knowledge of your organization to be more specific.

- Conversation reversibility

Classic

Once you answer a question, you must restart the flow to change your answer.

Assistant

Assistant lets users change their responses to previous questions.

Queries

- Better Query filtering

Classic

The Classic bot always presents the entire Query response verbatim.

Assistant

Assistant can apply basic filters to the Query response on the fly.

- Better Query summarization

Classic

The Classic bot always presents the entire Query response.

Assistant

Assistant summarizes the relevant sections of a Query.

Assistant Implications

As you move to a more conversational, generative AI experience, you'll need to prepare for how plugins will behave differently:

- Plugin design: Assistant reasons using the names, descriptions & configuration of your plugin. This means high-quality, unique names for your plugin, slots, and actions are important. Please review the best practices guide for building with Assistant.

- Limited context window: LLMs are subject to context window limitations. The maximum Query response size that our platform can support is 8k tokens. For reference, 1 token is roughly 4 characters, so for APIs that's typically ~32k bytes (32 kB), with ±5% accuracy. If your Query response exceeds 8k tokens, it will not work in Assistant.
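As a rough pre-check, the 4-characters-per-token rule of thumb above can be turned into a quick estimate of whether a Query response is likely to fit. This is a minimal sketch, not a platform API: the function names `estimate_tokens` and `fits_in_assistant`, treating "8k" as exactly 8,000 tokens, and measuring the compact JSON serialization of the response are all assumptions for illustration.

```python
import json

# Figures from this article: ~4 characters per token and an 8k-token cap.
# ASSUMPTION: "8k" is treated as exactly 8,000 tokens.
CHARS_PER_TOKEN = 4
MAX_RESPONSE_TOKENS = 8_000


def estimate_tokens(payload: object) -> int:
    """Roughly estimate tokens for a Query response (hypothetical helper)."""
    # ASSUMPTION: size is measured on compact JSON, so pretty-printing
    # whitespace is not counted.
    text = json.dumps(payload, separators=(",", ":"))
    # Round up: a partial token still consumes context.
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_assistant(payload: object) -> bool:
    """Return True if the estimated size is within the 8k-token limit (hypothetical helper)."""
    return estimate_tokens(payload) <= MAX_RESPONSE_TOKENS


if __name__ == "__main__":
    small = {"tickets": [{"id": i, "status": "open"} for i in range(10)]}
    large = {"blob": "x" * 40_000}
    print(estimate_tokens(small), fits_in_assistant(small))
    print(estimate_tokens(large), fits_in_assistant(large))
```

For example, a response that serializes to about 40,000 characters estimates to roughly 10,000 tokens, well over the 8k limit. Real tokenizers vary with content, so treat this as a sanity check rather than an exact measurement.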

Adaptive Responses

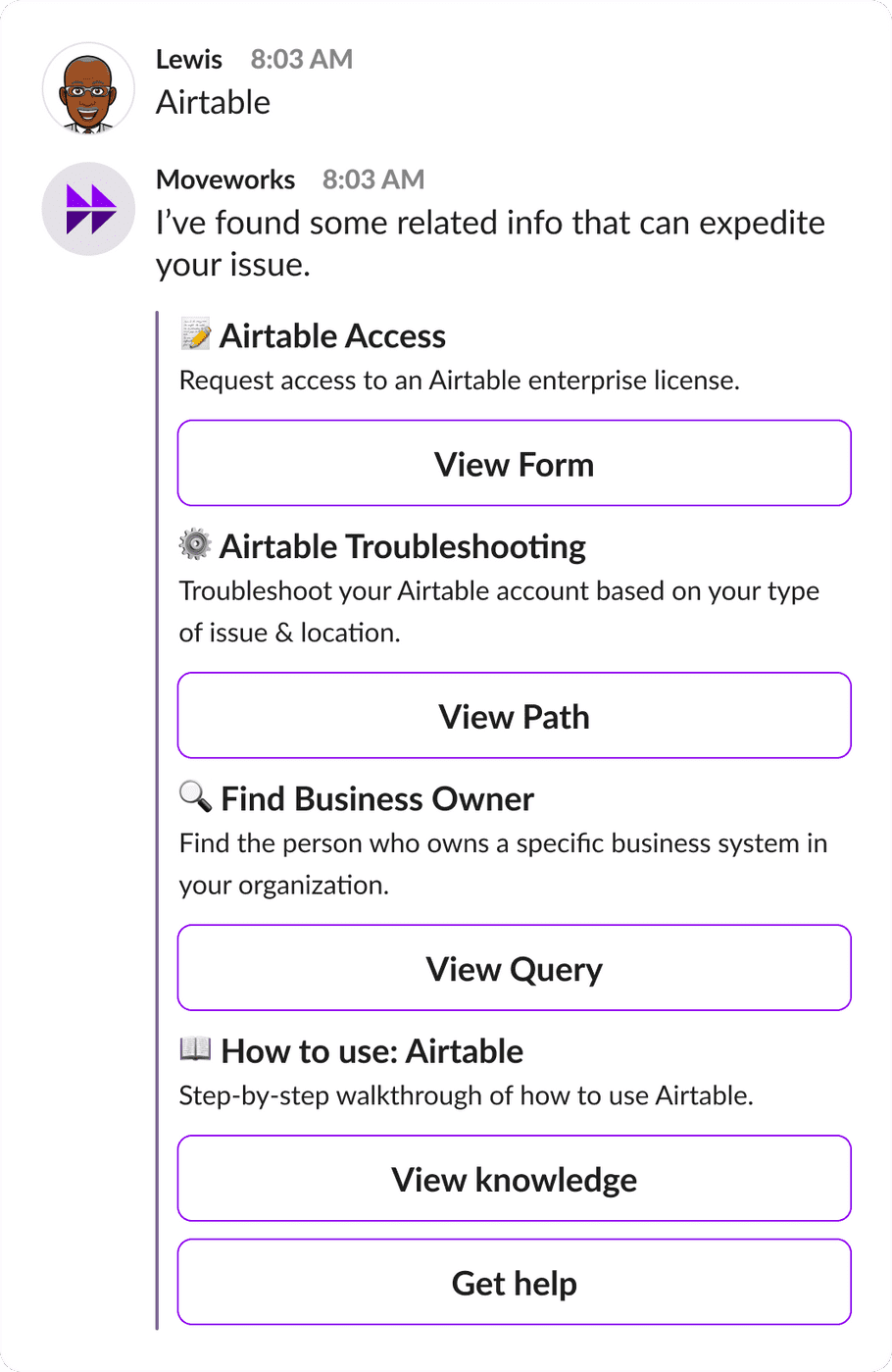

Sometimes the bot is confident a single plugin (Query, Article, Path, Form, etc.) will solve a problem. But often, questions have complex answers where multiple plugins may be relevant.

In such situations, Adaptive Response presents multiple relevant plugins when an employee's request is unclear. That way, employees can select the most relevant plugin.

Assistant

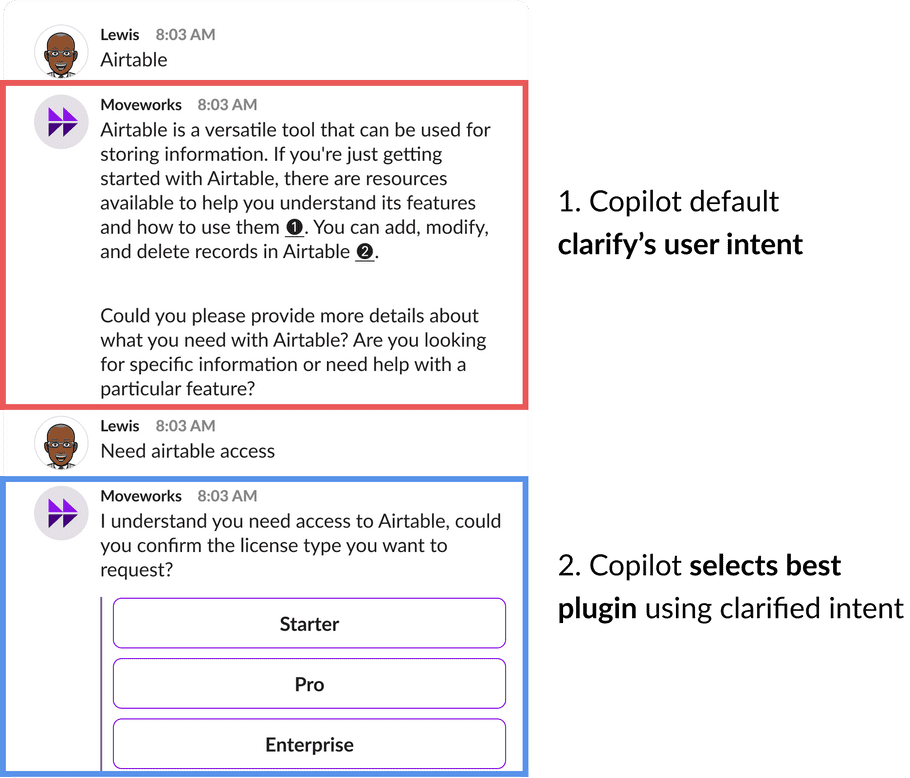

By default, Assistant tries to clarify intent & find the most relevant plugin.

Classic

Classic shows a list of relevant plugins for the user to pick from.

The Adaptive Response experience is only available in Classic.

However, Assistant disambiguates plugins by default. To further improve this disambiguation experience for certain plugins, contact your Customer Service team for ideas.